Prompting in the age of LLMs

🌈 Abstract

The article discusses the concept of prompt engineering, which is the practice of crafting and optimizing input prompts to effectively use large language models (LLMs) for a wide variety of applications. It covers the key elements of a prompt, general tips for designing prompts, and different types of prompting techniques such as zero-shot, few-shot, chain-of-thought, and ReAct prompting.

🙋 Q&A

[01] What is Prompt and Prompt Engineering?

1. What is a prompt? A prompt is a specific set of inputs provided by the user that guides an LLM to generate an appropriate response or output for a given task or instruction. The output is based on the knowledge the LLM already has.

2. What is prompt engineering? Prompt engineering refers to the practice of crafting and optimizing input prompts to effectively use LLMs for a wide variety of applications. The quality of prompts that you provide to LLMs can impact the quality of their responses.

[02] Elements of a Prompt

1. What are the key elements of a prompt? A prompt may contain the following elements:

- Instruction: Task or instruction for the model (e.g., classify, summarize, etc.)

- Input: The statement or question the model should respond to.

- Context: Additional relevant information to guide the model's response (e.g., examples to help the model better understand the task).

- Output Format: The type or format the model's output should take.
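As a rough illustration of how these four elements can fit together, here is a minimal sketch that assembles them into one prompt string. The function name `build_prompt`, the `###` section labels, and the sample review are assumptions made for this example; they are not a required format.

```python
def build_prompt(instruction: str, input_text: str,
                 context: str = "", output_format: str = "") -> str:
    """Assemble a prompt from the four elements, skipping any left empty."""
    sections = [
        ("Instruction", instruction),
        ("Context", context),
        ("Input", input_text),
        ("Output format", output_format),
    ]
    # Each non-empty element gets its own labeled section, so the model
    # can tell the instruction apart from the input and the context.
    return "\n\n".join(
        f"### {name}\n{text.strip()}" for name, text in sections if text.strip()
    )


# Hypothetical usage: a sentiment task with no extra context.
prompt = build_prompt(
    instruction="Classify the sentiment of the review as positive, negative, or neutral.",
    input_text="The battery lasts all day, but the screen scratches easily.",
    output_format="Answer with a single word.",
)
print(prompt)
```

Not every prompt needs all four elements; here the context section is simply omitted because none was supplied.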

[03] General Tips for Designing Prompts

1. What are some general tips for designing effective prompts?

- Be clear and concise, avoiding ambiguous language.

- Format prompts properly, separating the input, instruction, context, and output directions.

- Focus on what the model needs to do, rather than what it should not do.

- Provide examples to articulate the desired output format.

- Control the length of the output by explicitly requesting it in the prompt.

[04] Types of Prompting Techniques

1. What is zero-shot prompting? Zero-shot prompting means asking the model to complete a task from instructions alone, without including any worked examples in the prompt. The model relies entirely on what it learned during training. The larger and more capable the LLM, the better the results of zero-shot prompting tend to be.
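A minimal sketch of a zero-shot prompt, assuming a sentiment classification task. The helper name `zero_shot_prompt` and the `Text:` / `Label:` layout are illustrative choices, not a standard.

```python
def zero_shot_prompt(task: str, text: str) -> str:
    """State the task and the input; include no worked examples."""
    return f"{task}\n\nText: {text}\nLabel:"


# Hypothetical usage: the model sees only the instruction and one input.
zs = zero_shot_prompt(
    "Classify the sentiment of the text as positive or negative.",
    "I loved this film.",
)
print(zs)
```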

2. What is few-shot prompting? In contrast to zero-shot prompting, few-shot prompting includes a small number of examples (also called "shots") in the prompt itself. No training takes place and the model's weights are not updated; the model infers the pattern from the limited examples, plus the instructions provided by the user, and applies it to the new input.

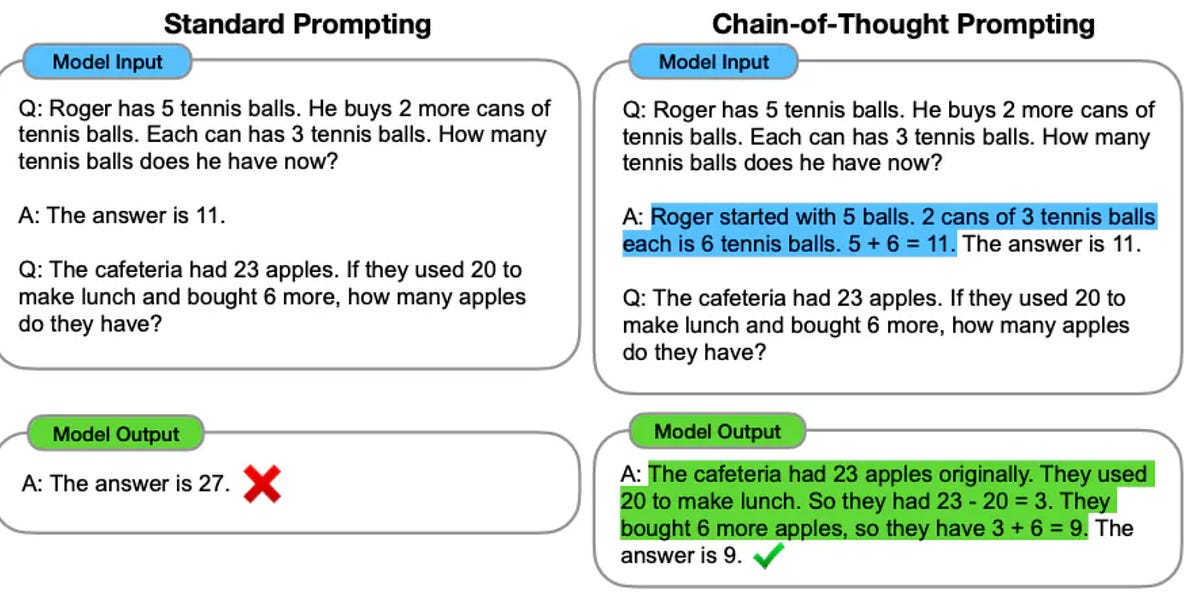
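To make the idea of "shots" concrete, the sketch below places two labeled examples ahead of the new input. The helper name `few_shot_prompt`, the `Text:` / `Label:` layout, and the sample reviews are assumptions for illustration only.

```python
from typing import Sequence, Tuple


def few_shot_prompt(task: str, examples: Sequence[Tuple[str, str]], text: str) -> str:
    """Put labeled examples ("shots") before the new input."""
    lines = [task, ""]
    for example_text, label in examples:
        lines += [f"Text: {example_text}", f"Label: {label}", ""]
    # The final entry has no label: the model is expected to complete it.
    lines += [f"Text: {text}", "Label:"]
    return "\n".join(lines)


# Hypothetical usage with two shots.
fs = few_shot_prompt(
    "Classify the sentiment of the text as positive or negative.",
    [("Great service and friendly staff.", "positive"),
     ("The food arrived cold.", "negative")],
    "I would happily come back.",
)
print(fs)
```

The examples also show the expected output format, so the model is more likely to answer with a bare label.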

3. What is chain-of-thought (CoT) prompting? Chain-of-Thought (CoT) prompting is a technique that prompts the model to work through intermediate reasoning steps before giving its final answer. This helps LLMs with tasks that require logical thinking and multiple steps to solve, such as arithmetic or commonsense reasoning questions.
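One common way to apply CoT is to include a worked example whose answer spells out its reasoning, so the model imitates that style on the new question. The sketch below assumes this few-shot form; the example problem, the "The answer is" convention, and the helper names are illustrative assumptions.

```python
import re
from typing import Optional

# A single worked example that shows the reasoning, not just the result.
COT_EXAMPLE = (
    "Q: A shop has 5 boxes with 12 pens each and sells 20 pens. "
    "How many pens are left?\n"
    "A: 5 boxes of 12 pens is 5 * 12 = 60 pens. "
    "After selling 20, 60 - 20 = 40 pens remain. The answer is 40.\n"
)


def cot_prompt(question: str) -> str:
    """Prepend the worked example so the model reasons step by step."""
    return f"{COT_EXAMPLE}\nQ: {question}\nA:"


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final number out of a completion that follows the convention."""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


print(cot_prompt("A box holds 3 rows of 4 eggs, plus 2 loose eggs. How many eggs?"))
```

Ending the example with a fixed phrase makes the final answer easy to separate from the reasoning, which is what `extract_answer` relies on.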

4. What is ReAct prompting? ReAct (Reasoning + Acting) prompting allows language models to generate verbal reasoning traces and task-specific actions in an interleaved manner. Actions receive feedback (observations) from the external environment, while reasoning traces do not touch the environment; they update the model's internal state by reasoning over the context and incorporating useful information for future reasoning and action.
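The thought, action, and observation cycle can be sketched as a small loop. Everything here is a simplified assumption: `scripted_model` is a hypothetical stand-in that replays fixed replies instead of calling a real LLM, `calculator` is a toy tool, and the `Action: tool[argument]` syntax is just one possible convention.

```python
import re
from typing import Callable, Dict, Optional

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")


def calculator(expression: str) -> str:
    """Toy tool: evaluates 'a * b' or 'a + b' for two integers."""
    left, op, right = expression.split()
    a, b = int(left), int(right)
    return str(a * b if op == "*" else a + b)


def scripted_model(transcript: str) -> str:
    """Hypothetical stand-in for an LLM call; replies from a fixed script."""
    if "Observation:" not in transcript:
        return "Thought: I should use the calculator.\nAction: calculator[17 * 23]"
    last_observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: The tool returned the product.\nFinal Answer: {last_observation}"


def react_loop(question: str, model: Callable[[str], str],
               tools: Dict[str, Callable[[str], str]],
               max_steps: int = 5) -> Optional[str]:
    """Alternate model steps with tool calls until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        action = ACTION_RE.search(step)
        if action is None:
            break  # Neither an action nor an answer: stop instead of looping.
        name, argument = action.groups()
        tool = tools.get(name)
        observation = tool(argument) if tool else f"unknown tool {name!r}"
        # Feed the environment's feedback back into the context.
        transcript += f"Observation: {observation}\n"
    return None


answer = react_loop("What is 17 * 23?", scripted_model, {"calculator": calculator})
print(answer)
```

The key point is that each observation is appended to the transcript, so the next reasoning step can take it into account.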