Using LLMs for Evaluation

🌈 Abstract

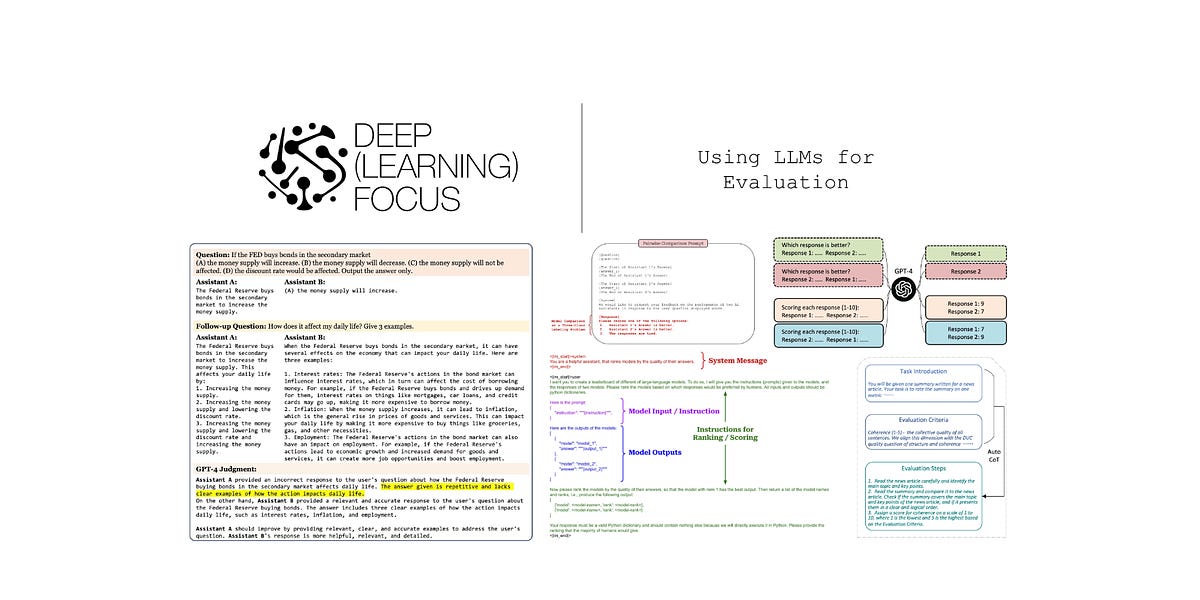

The article discusses the use of large language models (LLMs) as evaluators for other LLMs, a technique commonly referred to as "LLM-as-a-Judge". It covers the motivation behind this approach, the different setups and prompting strategies used, the strengths and limitations of LLM-based evaluations, and various analyses and insights from related research papers.

🙋 Q&A

[01] Motivation and Overview of LLM-as-a-Judge

1. What are the key motivations behind using LLMs as evaluators for other LLMs?

- Traditional evaluation metrics like ROUGE and BLEU tend to break down when evaluating the diverse, open-ended outputs of modern LLMs.

- Human evaluation, while valuable, is noisy, time-consuming, and expensive, which impedes the ability to iterate quickly during model development.

- LLM-as-a-Judge provides a scalable, reference-free, and explainable way to approximate human preferences in a cost-effective manner.

2. What are the different setups and prompting strategies used for LLM-based evaluations?

- Pairwise comparison: The judge is presented with a question and two model responses and asked to identify the better response.

- Pointwise scoring: The judge is given a single response to a question and asked to assign a score, e.g., using a Likert scale.

- Reference-guided scoring: The judge is given a reference solution in addition to the question and response(s) to help with the scoring process.

- These techniques can be combined with chain-of-thought (CoT) prompting to improve scoring quality.

3. What are some of the key strengths and limitations of LLM-as-a-Judge evaluations?

- Strengths:

- Scalable and generic, applicable to a wide variety of open-ended tasks.

- Highly correlated with human preferences when using powerful LLM judges like GPT-4.

- Provides human-readable rationales to explain the judge's scores.

- Limitations:

- Introduces various biases, such as position bias, verbosity bias, and self-enhancement bias.

- Struggles with grading complex reasoning and math questions.

- Susceptible to being misled by incorrect information in the context.

[02] Detailed Analysis of LLM-as-a-Judge

1. How do early works like Vicuna and Guanaco leverage LLM-as-a-Judge for evaluation?

- Vicuna uses GPT-4 to rate the quality of its own outputs and those of other models on a scale of 1-10, providing detailed explanations for the scores.

- Guanaco uses GPT-4 to perform both pairwise comparisons and pointwise scoring of model outputs, finding that GPT-4 evaluations correlate well with human preferences.

2. What are the key insights from the in-depth analysis of LLM-as-a-Judge in the LLM-as-a-Judge publication?

- LLM judges like GPT-4 can achieve 80% agreement with human preferences, matching the agreement rate between human annotators.

- However, LLM judges exhibit various biases, such as position bias, verbosity bias, and self-enhancement bias.

- Techniques like position switching, few-shot examples, and using multiple LLM judges can help mitigate these biases.

3. How does the AlpacaEval framework leverage LLM-as-a-Judge for automated model evaluation?

- AlpacaEval uses a fixed set of 805 instructions to evaluate instruction-following models, with an LLM judge (e.g., GPT-4-Turbo) rating the quality of each model's output.

- It employs techniques like randomizing the position of model outputs and measuring logprobs to reduce length bias in the evaluations.

- The length-controlled version of AlpacaEval is found to have a 0.98 Spearman correlation with human ratings from the Chatbot Arena.

[03] Other Related Research

1. What are some of the other ways researchers have explored using LLMs as evaluators?

- Training specialized LLM judges, such as Prometheus, to improve the reliability and explainability of the evaluation process.

- Using LLM-as-a-Judge techniques to generate synthetic preference data for Reinforcement Learning from AI Feedback (RLAIF), which can then be used to train more aligned models.

2. What are the key takeaways from the paper that analyzes the use of LLMs as an alternative to human evaluation?

- LLMs can be a viable alternative to human evaluation, with GPT-4 and ChatGPT demonstrating the ability to reliably assess text quality and provide meaningful feedback.

- However, LLM evaluations should be used in conjunction with human evaluation, as LLMs can also exhibit biases and limitations.

</output_format>