Virtual try-all: Visualizing any product in any personal setting

🌈 Abstract

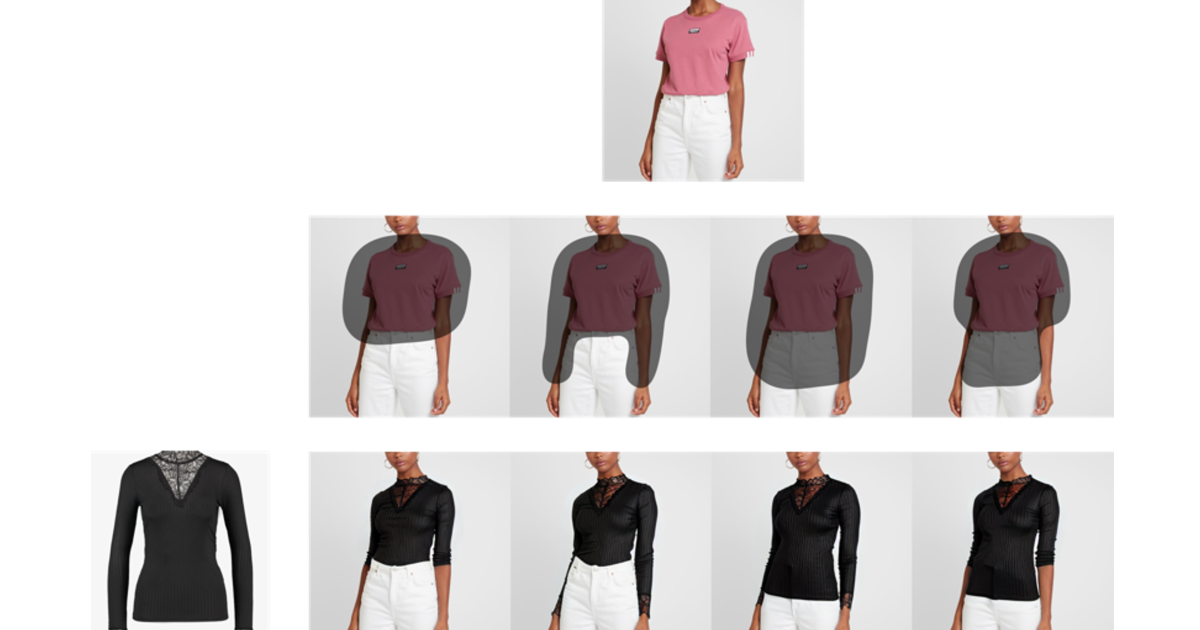

The article presents a novel generative AI model called Diffuse-to-Choose (DTC) that allows users to seamlessly insert any product into any personal scene. DTC is the first model to address the "virtual try-all" problem, which is more general than the traditional "virtual try-on" problem, as it works across a wide range of product categories.

🙋 Q&A

[01] Virtual Try-All: Visualizing any product in any personal setting

1. What is the virtual try-all problem, and how does it differ from the traditional virtual try-on problem?

- The virtual try-all problem is more general than the virtual try-on problem, as it allows users to insert any product into any personal setting, rather than just trying on clothes.

- Unlike virtual try-on, virtual try-all does not require 3D models or multiple views of the product, and it can work with "in the wild" images like regular cellphone pictures, not just sanitized, white-background, or professional-studio-grade images.

2. What are the key characteristics of the Diffuse-to-Choose (DTC) model?

- DTC is the first model to address the virtual try-all problem, as opposed to the virtual try-on problem.

- It is a single model that works across a wide range of product categories.

- It does not require 3D models or multiple views of the product, just a single 2D reference image.

- It can work with "in the wild" images, not just sanitized, white-background, or professional-studio-grade images.

- It is fast, cost-effective, and scalable, generating an image in approximately 6.4 seconds on a single AWS g5.xlarge instance.

[02] Technical Details of the Diffuse-to-Choose Model

1. What is the underlying architecture of the Diffuse-to-Choose model?

- Diffuse-to-Choose is an inpainting latent-diffusion model, with architectural enhancements to preserve products' fine-grained visual details.

- It uses a U-Net encoder-decoder model, with a primary U-Net encoder and a secondary U-Net encoder.

- The secondary encoder takes a crude copy-paste collage of the product image inserted into the mask, and its output is used as a "hint signal" to preserve the product's fine-grained details.

- The hint signal and the output of the primary U-Net encoder are passed to a feature-wise linear-modulation (FiLM) module, which aligns the features of the two encodings before passing them to the U-Net decoder.

2. How was the Diffuse-to-Choose model trained and evaluated?

- The model was trained on AWS p4d.24xlarge instances with NVIDIA A100 40GB GPUs, using a dataset of a few million pairs of public images.

- It was evaluated on the virtual try-all task and compared to four different versions of a traditional image-conditioned inpainting model, as well as the state-of-the-art model on the virtual try-on task.

- Evaluation metrics included CLIP (contrastive language-image pretraining) score and Fréchet inception distance (FID), which measure similarity and realism/diversity of generated images.

- On the virtual try-all task, DTC outperformed the inpainting baselines on both metrics, with a 9% margin in FID over the best-performing baseline.

- On the virtual try-on task, DTC performed comparably to the specialized baseline, which is a substantial achievement given its generality.

Shared by Daniel Chen ·

© 2024 NewMotor Inc.