An introduction to Large Language Models

🌈 Abstract

An introduction to Large Language Models (LLMs), including their technical details, current challenges, and future opportunities.

🙋 Q&A

[01] Large Language Models

1. What is the backbone of Large Language Models?

- The backbone of Large Language Models is the artificial neural network (ANN), which is inspired by the human brain.

- ANNs consist of interconnected artificial neurons whose connections are defined by parameters. Learning occurs through backpropagation: the model's output is compared to the desired output, and the resulting error is propagated backward through the network to adjust the parameters.
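
As a rough illustration of learning by comparing output to desired output, the sketch below trains a single artificial neuron with gradient descent. The function name `train_neuron`, the sample data, and the learning rate are all assumptions made for this example; a real network stacks many such neurons and uses backpropagation to pass the error through every layer.

```python
# Toy sketch: one artificial neuron (output = w * x + b) trained by gradient descent.
# The data, learning rate, and epoch count are illustrative assumptions.

def train_neuron(samples, lr=0.05, epochs=500):
    w, b = 0.0, 0.0  # the neuron's parameters
    for _ in range(epochs):
        for x, target in samples:
            output = w * x + b        # forward pass
            error = output - target   # compare the output to the desired output
            # Gradients of the squared error 0.5 * error**2 with respect to w and b
            grad_w = error * x
            grad_b = error
            # Nudge each parameter against its gradient
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b

# Hypothetical training data that follows y = 2x + 1
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_neuron(samples)
print(w, b)  # the learned parameters should end up close to 2 and 1
```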

2. What is the specific ANN architecture behind LLMs?

- The specific ANN architecture behind LLMs is called a "Transformer" architecture, which was developed in 2017.

- Transformers use an "attention" mechanism to identify the most crucial parts of the input sequence, allowing them to handle large amounts of context without internal memory.
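
One common formulation of attention is scaled dot-product attention. The sketch below is a minimal pure-Python version, assuming small hand-written vectors; the function names and example values are illustrative, and production Transformers add learned projections, multiple heads, and batched matrix operations.

```python
import math

def softmax(scores):
    # Subtract the maximum for numerical stability before exponentiating
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how much each position matters
        # Weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Hypothetical inputs: the query points strongly in the direction of the first key
queries = [[10.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
result = attention(queries, keys, values)
print(result)  # the output is dominated by the first value vector
```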

3. What is the standard training approach for LLMs?

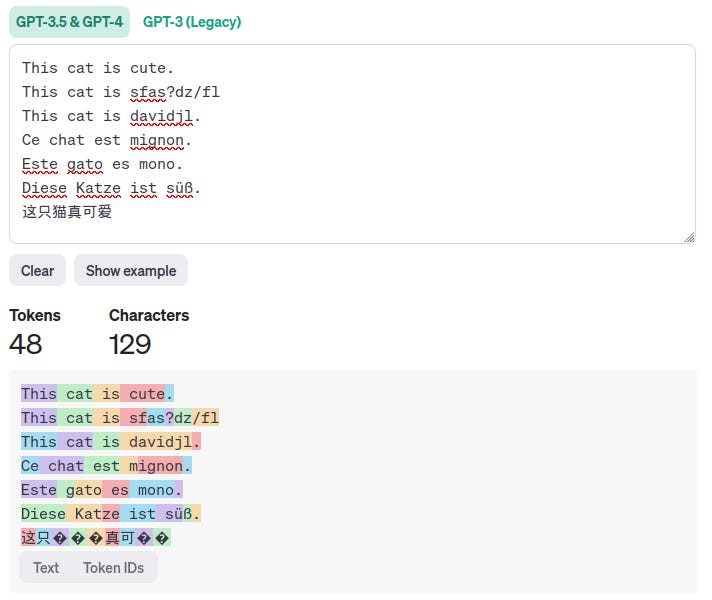

- The standard training approach for LLMs is next-token prediction, where the model is given most of a sentence and asked to predict the next word.

- LLMs operate on "tokens" rather than whole words; a token can be a word, a word segment, or a single character.

- To train these large models, AI companies have used datasets of text gathered from the public internet; GPT-3's training set contained roughly 500 billion tokens.
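
The idea of next-token prediction can be sketched with a toy model that simply counts which token follows which. This is an assumption-laden illustration, not how an LLM is implemented: whitespace splitting stands in for a real subword tokenizer, the three-sentence corpus is made up, and a neural network replaces the counting table in practice.

```python
from collections import Counter, defaultdict

def tokenize(text):
    # Assumption: whitespace splitting stands in for a real subword tokenizer
    return text.lower().split()

def training_pairs(tokens):
    # Each prefix of the sequence is paired with the token that follows it
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def fit_bigram(corpus):
    # Count how often each token is followed by each other token
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = tokenize(sentence)
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, token):
    # Return the most frequent follower, or None for an unseen token
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = ["the cat sat on the mat", "the cat ate the fish", "the cat slept"]
model = fit_bigram(corpus)
pairs = training_pairs(tokenize("the cat sat"))
print(pairs)                       # [(['the'], 'cat'), (['the', 'cat'], 'sat')]
print(predict_next(model, "the"))  # cat
```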

[02] Challenges Facing LLMs

1. What are the issues related to bias in LLMs?

- One of the largest issues, and one inherent to language models, is bias: the statistical patterns the models rely on to make accurate predictions are themselves a form of bias.

- The data used to train these models comes from the public internet, so it contains biases that are prevalent on the internet, such as gender stereotypes and cultural prejudices.

- Mitigating bias is challenging, as defining a universal standard for what is considered good or appropriate is difficult.

2. What is the issue of hallucinations in LLMs?

- LLMs can generate text that is false while still sounding factual, as they are only predicting the next token without fact-checking mechanisms.

- These false statements are often referred to as hallucinations. Efforts to resolve this issue, such as Retrieval-Augmented Generation (RAG), are still a work in progress.
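
The general idea behind RAG is to look up relevant text first and hand it to the model alongside the question, so the answer can be grounded in a source. The sketch below covers only the retrieval and prompt-building steps, under simplifying assumptions: word overlap stands in for the embedding-based search real systems typically use, the helper names are hypothetical, and no actual model is called.

```python
def words(text):
    # Lowercase the text and strip basic punctuation before splitting into a set of words
    for ch in "?.,":
        text = text.replace(ch, " ")
    return set(text.lower().split())

def retrieve(question, documents, k=1):
    # Rank documents by how many words they share with the question
    q = words(question)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=1):
    # Put the retrieved text in front of the question for the model to use
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical document store, using statements from this summary
documents = [
    "The Transformer architecture was developed in 2017.",
    "LLMs operate on tokens instead of words.",
]
question = "When was the Transformer architecture developed?"
prompt = build_prompt(question, documents)
print(prompt)
```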

3. What are the challenges related to the reliance on public internet data for training LLMs?

- Because LLMs are trained on publicly available internet data, which is a limited resource, future generative AI models may not offer significant performance improvements without new technological breakthroughs.

- The public internet may also fill up with AI-generated content, which could degrade the performance of models trained on it.

- Utilizing copyrighted internet data also raises legal concerns, leading to lawsuits against AI companies.

[03] Future Opportunities and Challenges

1. What are the potential future applications of LLMs?

- LLMs are now being used in a variety of applications, from assisting users in finding information on websites to offering medical advice based on scans and images.

- A clear direction for future generative AI applications is linking LLMs with other software, such as internet search or voice recognition, to create more seamless and natural interactions.

2. What are the key challenges that need to be addressed as LLMs are integrated into more applications?

- The challenges of bias, hallucinations, plateauing performance, and legal issues will need to be addressed as LLMs are integrated into more applications.

Shared by Daniel Chen

© 2024 NewMotor Inc.