What I've Learned Building Interactive Embedding Visualizations

🌈 Abstract

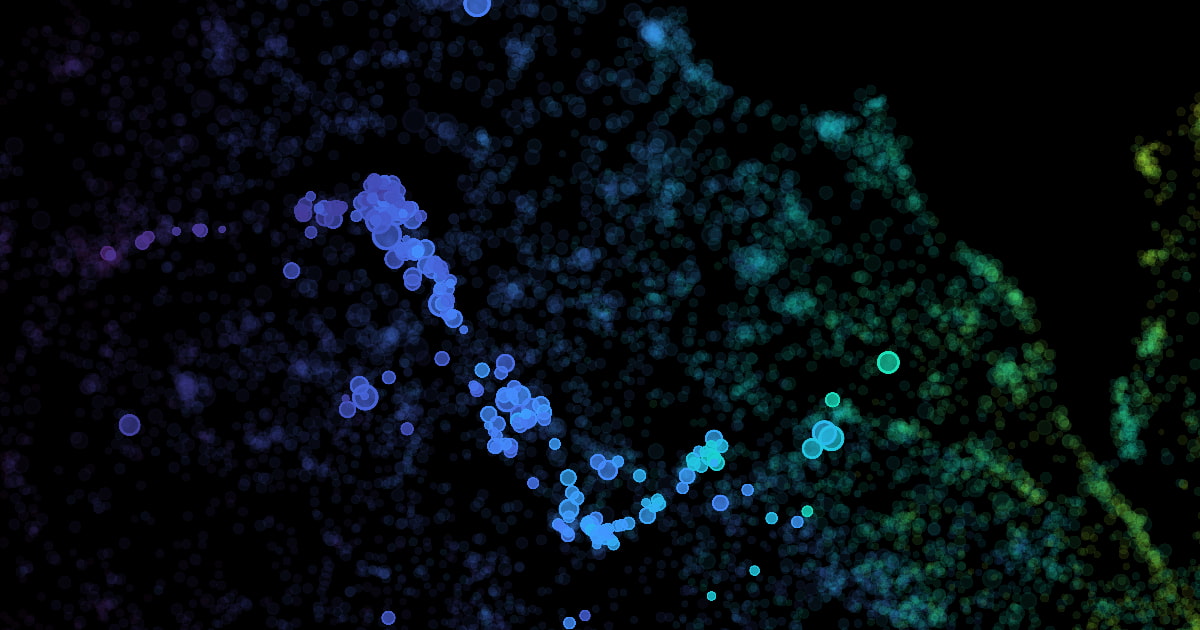

The article discusses the author's experience in building interactive embedding visualizations, which are tools for exploring and understanding relationships between entities (e.g. words, products, people) represented as points in a high-dimensional space. The author shares their process for creating these visualizations, including:

- Collecting and preprocessing the data to create a co-occurrence matrix

- Using the PyMDE library to generate an initial embedding

- Projecting the high-dimensional embedding down to 2D using algorithms like UMAP and t-SNE

- Implementing the interactive visualization as a web application using libraries like Pixi.JS or custom WebGL/WebGPU rendering

The article highlights the benefits of these embedding visualizations for exploring hidden relationships and breaking through the "black box" of recommendation algorithms, as well as the author's personal enjoyment in browsing and exploring these interactive spaces.

🙋 Q&A

[01] Building the Co-occurrence Matrix

1. What is the purpose of the co-occurrence matrix in the embedding generation process?

The co-occurrence matrix represents the relationships between the entities being embedded, and building it is a key step in the process. It is a square matrix in which each cell holds the co-occurrence count between two entities in the source data.

2. How does the author address the computational challenges of building a large co-occurrence matrix?

The author uses the numba library to JIT-compile the matrix generation code, which can provide significant performance improvements. They also experiment with using sparse matrix representations from the scipy.sparse library to reduce memory usage for very large datasets.

3. What are some techniques the author uses to filter or preprocess the raw data before building the co-occurrence matrix?

The author mentions dropping "very rarely seen or otherwise 'uninteresting' entities" from the dataset to reduce the cardinality and improve the quality of the resulting visualization. They also discuss weighting the co-occurrence values based on domain-specific information about the entities (e.g. for the osu! beatmap embedding, weighting by performance point similarity).
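The filtering and matrix-building steps described in this section can be sketched as follows. This is a minimal illustration, not the author's code: the function names, the `min_count` threshold, and the toy data are all hypothetical, and numba is treated as optional so the sketch still runs where it is not installed.

```python
from collections import Counter

import numpy as np

try:
    from numba import njit  # optional: JIT-compiles the pair-counting loop
except ImportError:
    def njit(func):  # fallback so the sketch still runs without numba
        return func


def filter_rare_entities(collections, min_count):
    """Drop entities seen in fewer than min_count collections, then re-index."""
    counts = Counter(e for coll in collections for e in set(coll))
    kept = sorted(e for e, n in counts.items() if n >= min_count)
    index_of = {entity: i for i, entity in enumerate(kept)}
    filtered = [sorted({index_of[e] for e in coll if e in index_of})
                for coll in collections]
    return filtered, kept


@njit
def count_pairs(flat_ids, offsets, matrix):
    """Add 1 to matrix[a, b] and matrix[b, a] for each pair sharing a collection."""
    for c in range(len(offsets) - 1):
        start, end = offsets[c], offsets[c + 1]
        for i in range(start, end):
            for j in range(i + 1, end):
                a, b = flat_ids[i], flat_ids[j]
                matrix[a, b] += 1
                matrix[b, a] += 1
    return matrix


def build_cooccurrence(collections, n_entities):
    # Flatten the ragged lists into two arrays so the compiled loop
    # only touches NumPy data.
    flat_ids = np.array([e for coll in collections for e in coll], dtype=np.int64)
    offsets = np.zeros(len(collections) + 1, dtype=np.int64)
    offsets[1:] = np.cumsum([len(coll) for coll in collections])
    matrix = np.zeros((n_entities, n_entities), dtype=np.int32)
    return count_pairs(flat_ids, offsets, matrix)


# Hypothetical data: each inner list is one user's set of entities.
collections = [["a", "b", "c"], ["b", "c"], ["a", "c", "d"]]
filtered, kept = filter_rare_entities(collections, min_count=2)
cooc = build_cooccurrence(filtered, n_entities=len(kept))
```

Here the entity "d" appears only once and is dropped before the matrix is built. A domain-specific weight could replace the `+= 1` increments, and for very large entity counts the dense array could be swapped for a `scipy.sparse` structure, as the answers above describe.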

[02] Generating the Embedding

1. Why does the author find that directly embedding the co-occurrence matrix does not produce good results?

The author notes that directly embedding the co-occurrence matrix results in "elliptical galaxies" with a dense mass of high-degree entities at the center and little other discernible structure. The author attributes this to problems with edge weighting and sparsity in the raw co-occurrence matrix, which direct embedding does nothing to correct.

2. How does the author use the PyMDE library to preprocess the co-occurrence matrix before generating the final embedding?

The author uses the pymde.preserve_neighbors function from PyMDE, which computes a k-nearest neighbor graph from the co-occurrence matrix, yielding a sparser, unweighted set of edges, and sets up the embedding problem on that graph. This sidesteps the weighting and density problems of the raw co-occurrence matrix before the final embedding is generated.

3. What are some of the key parameters the author explores when using PyMDE and the downstream projection algorithms (UMAP, t-SNE)?

For PyMDE, the author focuses on the n_neighbors and embedding_dim parameters, experimenting to find the right balance between preserving local and global structure. For the 2D projection algorithms, the author highlights the importance of parameters like n_neighbors and min_dist in UMAP, and the tradeoffs between local and global structure preservation in UMAP vs. t-SNE.
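To make the neighbor-graph idea from this section concrete, here is a small NumPy sketch of reducing a weighted co-occurrence matrix to an unweighted k-nearest neighbor edge set. It is a simplified stand-in, not how PyMDE implements `pymde.preserve_neighbors`: it assumes raw co-occurrence counts serve as the similarity measure, and the function name `knn_edges` and the toy matrix are hypothetical.

```python
import numpy as np


def knn_edges(cooc, n_neighbors):
    """Return the unweighted edges linking each entity to its strongest neighbors."""
    weights = cooc.astype(np.float64)  # astype copies, so the input is untouched
    np.fill_diagonal(weights, -np.inf)  # never pick an entity as its own neighbor
    edges = set()
    for i in range(weights.shape[0]):
        # Indices of the n_neighbors largest co-occurrence counts in row i.
        top = np.argsort(weights[i])[::-1][:n_neighbors]
        for j in top:
            if weights[i, j] > 0:  # skip pairs that never co-occur
                edges.add((min(i, int(j)), max(i, int(j))))
    return sorted(edges)


# Hypothetical symmetric co-occurrence counts for four entities.
cooc = np.array([
    [0, 9, 1, 0],
    [9, 0, 2, 1],
    [1, 2, 0, 8],
    [0, 1, 8, 0],
])
edges = knn_edges(cooc, n_neighbors=1)
```

Raising `n_neighbors` keeps more of the weaker links, which is the same trade-off between local and global structure that the parameter tuning above is concerned with. With PyMDE itself, the corresponding step would be a call along the lines of `pymde.preserve_neighbors(data, embedding_dim=..., n_neighbors=...)` followed by `.embed()` on the returned object.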

[03] Building the Visualization

1. Why does the author prefer to build the visualizations as web applications?

The author cites the benefits of web applications, such as cross-platform support, GPU acceleration via WebGL/WebGPU, and easy distribution via shareable links that anyone can access on any device.

2. What are the advantages and disadvantages the author observes when using SVG vs. WebGL/WebGPU-powered libraries like Pixi.JS for rendering the visualizations?

The author found that SVG-based visualizations struggled with performance issues when rendering large (>10k entities) interactive embeddings, due to layout calculations and browser-level limitations. In contrast, rendering hundreds of thousands of circles on the GPU using Pixi.JS or custom WebGL/WebGPU code was much more performant.

3. How does the author integrate the generated 2D embedding coordinates with entity metadata when building the final visualization?

The author mentions joining the 2D embedding coordinates with the entity metadata and storing the combined data in a single static file (e.g. JSON or a custom binary format) for efficient loading in the web-based visualization.
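The join-and-export step might look roughly like the sketch below, which uses only the Python standard library. The field names, the `(uint32 id, float32 x, float32 y)` binary layout, and the sample data are assumptions made for illustration; the source does not specify the author's actual file format.

```python
import json
import struct


def join_points(coords, metadata):
    """Merge 2D coordinates with metadata records that share the same entity id."""
    points = []
    for entity_id, (x, y) in coords.items():
        record = metadata.get(entity_id)
        if record is None:  # skip entities that have no metadata
            continue
        points.append({"id": entity_id, "x": x, "y": y, **record})
    return points


def pack_positions(points):
    """Pack positions as little-endian (uint32 id, float32 x, float32 y) triples."""
    return b"".join(struct.pack("<Iff", p["id"], p["x"], p["y"]) for p in points)


# Hypothetical inputs: projected coordinates and per-entity metadata.
coords = {0: (0.5, -1.25), 1: (2.0, 3.5), 2: (4.0, 4.0)}
metadata = {0: {"name": "first"}, 1: {"name": "second"}}

points = join_points(coords, metadata)
as_json = json.dumps(points)         # readable, easy to load in the browser
as_binary = pack_positions(points)   # compact, 12 bytes per point
```

JSON is the simpler choice for small embeddings, while a packed layout like this one can be read on the web side through a typed-array view, which may matter once the point count reaches the hundreds of thousands.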