Your AI Product Needs Evals

🌈 Abstract

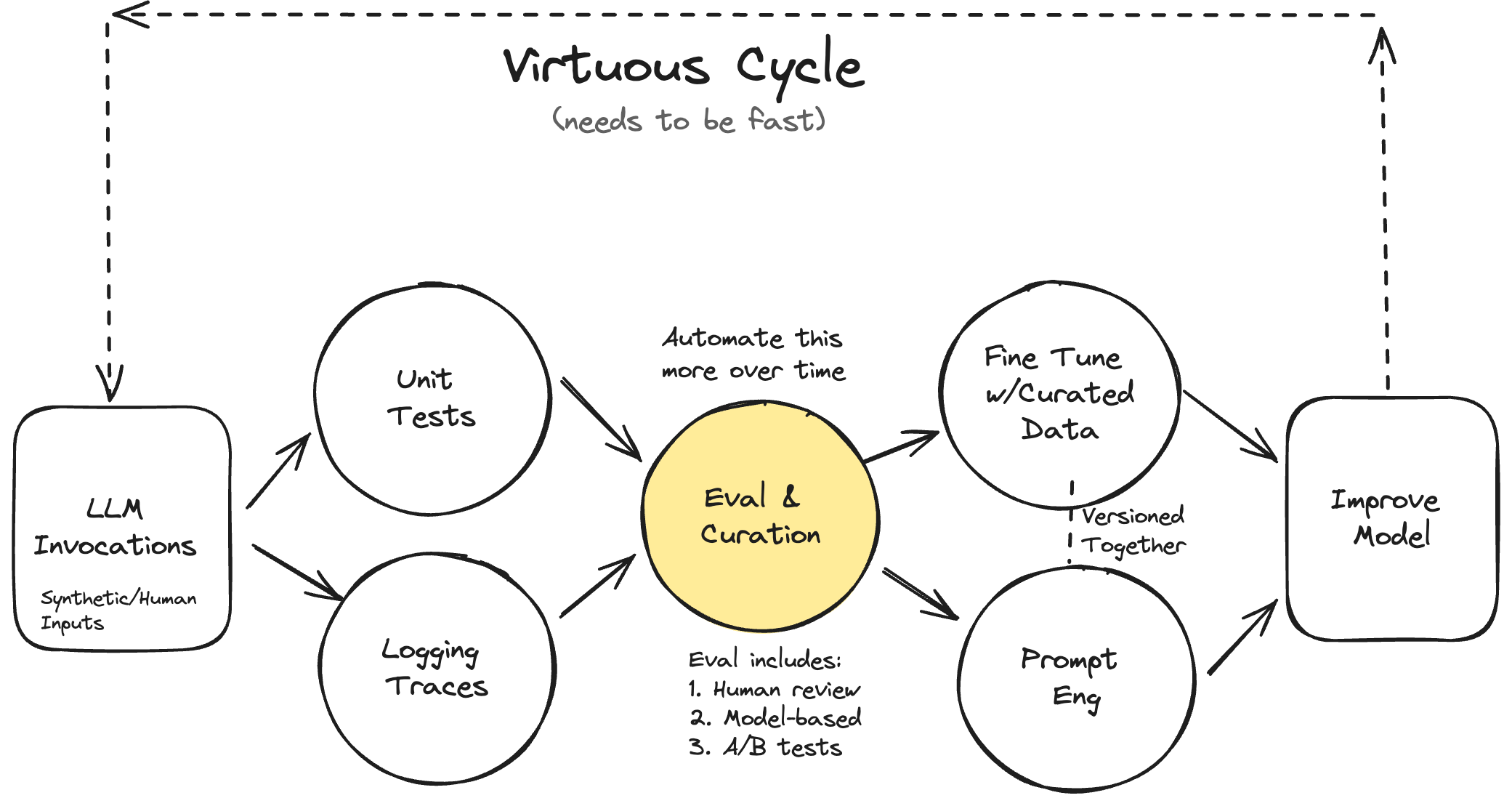

The article argues that robust evaluation systems are essential for AI products, particularly those built on large language models (LLMs), and identifies a failure to build effective evaluation systems as the common pitfall behind unsuccessful AI products. It then presents a case study of Lucy, an AI assistant built for Rechat, a real estate SaaS application, and describes the systematic, evaluation-driven approach used to improve it.

🙋 Q&A

[01] Iterating Quickly == Success

1. What are the three key activities that differentiate great from mediocre AI products?

- Evaluating quality (e.g., tests)

- Debugging issues (e.g., logging & inspecting data)

- Changing the behavior of the system (e.g., prompt engineering, fine-tuning, writing code)

2. How does streamlining the evaluation process help with other activities? Streamlining evaluation makes the other activities, such as changing the behavior of the system, easier. This mirrors how tests in software engineering require upfront investment but pay dividends over the long term.

[02] The Types of Evaluation

1. What are the three levels of evaluation discussed in the article?

- Level 1: Unit Tests

- Level 2: Model & Human Eval (including debugging)

- Level 3: A/B testing

2. How do the costs of these evaluation levels compare? Cost rises with each level (Level 3 > Level 2 > Level 1), and that ordering dictates how often and in what manner each level is run: the cheaper the level, the more frequently it can be executed.

3. What balance must be struck when introducing each level of testing? The balance is between getting user feedback quickly, managing user perception, and the goals of the AI product, a balancing act not unlike the one faced in product development more generally.

[03] Level 1: Unit Tests

1. How do unit tests for LLMs differ from typical unit tests? Like typical unit tests, they are assertions of the kind you would write in pytest. Unlike typical unit tests, these assertions should be organized for reuse beyond the test suite, for example in data cleaning and in automatic retries during model inference, where a failed assertion triggers another attempt.
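A minimal sketch of that reuse, assuming a hypothetical rule (replies must never expose a raw UUID) and a caller-supplied `generate` function standing in for the model call; neither detail comes from the text above. One check function backs a pytest-style test, a data-cleaning filter, and a retry loop that re-queries the model with the failure message.

```python
import re
from typing import Callable, Iterable

# Hypothetical rule for illustration: user-facing replies must not leak raw UUIDs.
UUID_RE = re.compile(
    r"\b[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}\b",
    re.IGNORECASE,
)


def assert_no_uuids(text: str) -> None:
    """Raise AssertionError if text contains a UUID.

    An explicit raise (rather than a bare `assert`) keeps the check active
    even when Python runs with -O, so it is safe to call outside tests.
    """
    if UUID_RE.search(text):
        raise AssertionError("response exposes a raw UUID")


def test_reply_has_no_uuids() -> None:
    # Collected by pytest because of the test_ prefix.
    assert_no_uuids("Your listing at 12 Oak St is now live.")


def clean(records: Iterable[str]) -> list[str]:
    """Data cleaning: keep only records that pass the assertion."""
    kept = []
    for record in records:
        try:
            assert_no_uuids(record)
        except AssertionError:
            continue  # drop records that fail the check
        kept.append(record)
    return kept


def generate_with_retries(
    generate: Callable[[str], str], prompt: str, max_attempts: int = 3
) -> str:
    """Inference: re-query the model, quoting the failed check, until a reply passes."""
    current = prompt
    for _ in range(max_attempts):
        reply = generate(current)
        try:
            assert_no_uuids(reply)
            return reply
        except AssertionError as err:
            # Feed the assertion message back so the model can course-correct.
            current = f"{prompt}\n\nYour previous answer was rejected ({err}). Please try again."
    raise RuntimeError(f"no passing reply after {max_attempts} attempts")


if __name__ == "__main__":
    # A fake model that leaks a UUID on its first call, then behaves.
    replies = iter(["Saved contact 123e4567-e89b-12d3-a456-426614174000.", "Contact saved."])
    print(generate_with_retries(lambda _prompt: next(replies), "Save this contact"))
```

Keeping the check as a plain function that raises `AssertionError` is what lets the same code serve all three roles; real checks would encode whatever the product actually requires.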

2. What are the steps outlined for writing effective unit tests for LLMs?

- Write scoped tests by breaking the scope of the LLM application down into features and scenarios.

- Create test cases, often using an LLM to generate synthetic inputs.

- Run and track the tests regularly as part of the development process.
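The three steps can be sketched as a small harness. Everything here is hypothetical: the feature and scenario names, the `synthesize_inputs` placeholder (which returns canned strings where a real system would prompt an LLM for synthetic inputs), and the fake assistant. Results are grouped by (feature, scenario) so pass rates can be compared from run to run.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EvalCase:
    feature: str     # a capability of the assistant, e.g. "listing_search"
    scenario: str    # a situation within that feature, e.g. "no_results"
    user_input: str


def synthesize_inputs(feature: str, scenario: str, n: int) -> list[str]:
    """Placeholder for prompting an LLM to write n synthetic user inputs.

    Returns canned strings so the sketch runs offline.
    """
    return [f"[{feature}/{scenario}] synthetic request #{i}" for i in range(n)]


def build_suite(scopes: dict[str, list[str]], per_scenario: int = 3) -> list[EvalCase]:
    """Steps 1 and 2: scope by feature and scenario, then create cases for each."""
    return [
        EvalCase(feature, scenario, text)
        for feature, scenarios in scopes.items()
        for scenario in scenarios
        for text in synthesize_inputs(feature, scenario, per_scenario)
    ]


def run_suite(
    suite: list[EvalCase],
    assistant: Callable[[str], str],
    check: Callable[[EvalCase, str], bool],
) -> dict[tuple[str, str], float]:
    """Step 3: run every case and return the pass rate per (feature, scenario)."""
    passed: dict[tuple[str, str], int] = defaultdict(int)
    total: dict[tuple[str, str], int] = defaultdict(int)
    for case in suite:
        key = (case.feature, case.scenario)
        total[key] += 1
        if check(case, assistant(case.user_input)):
            passed[key] += 1
    return {key: passed[key] / total[key] for key in total}


if __name__ == "__main__":
    scopes = {"listing_search": ["no_results", "many_results"], "contact_create": ["missing_email"]}

    def fake_assistant(text: str) -> str:
        # Pretend the assistant returns nothing for the "no_results" scenario.
        return "" if "no_results" in text else f"Handled: {text}"

    def non_empty(case: EvalCase, reply: str) -> bool:
        return reply != ""

    print(run_suite(build_suite(scopes), fake_assistant, non_empty))
    # {('listing_search', 'no_results'): 0.0, ('listing_search', 'many_results'): 1.0, ('contact_create', 'missing_email'): 1.0}
```

Reporting per scenario rather than one overall score makes it visible which slice of behavior a change helped or hurt; saving each run's dictionary alongside the commit it came from is one simple way to track results over time.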

3. Why is it important not to skip the unit test step? Unit tests provide fast feedback while iterating on the AI system. Many teams eventually outgrow their unit tests and rely more on the other levels of evaluation as the product matures, but skipping them early forfeits that quick feedback loop.

[04] Case Study: Lucy, A Real Estate AI Assistant

1. What were the symptoms of the performance plateau experienced by Lucy, the AI assistant?

- Addressing one failure mode led to the emergence of others, resembling a game of whack-a-mole.

- There was limited visibility into the AI system's effectiveness across tasks beyond "vibe checks".

- Prompts expanded into long and unwieldy forms, attempting to cover numerous edge cases and examples.
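One way to replace vibe checks with something measurable, and to catch the whack-a-mole pattern where fixing one failure mode breaks another, is to diff per-scenario pass rates before and after each change. A minimal sketch, assuming pass rates are stored as plain dictionaries keyed by (feature, scenario); the function name, the tolerance parameter, and any example values are hypothetical.

```python
Key = tuple[str, str]  # (feature, scenario)


def diff_runs(
    before: dict[Key, float], after: dict[Key, float], tolerance: float = 0.0
) -> dict[str, list[Key]]:
    """Classify each scenario as improved, regressed, or unchanged between two runs.

    Scenarios present in only one run are listed under "missing" rather than guessed at.
    """
    report: dict[str, list[Key]] = {
        "improved": [], "regressed": [], "unchanged": [], "missing": [],
    }
    for key in sorted(set(before) | set(after)):
        if key not in before or key not in after:
            report["missing"].append(key)
            continue
        delta = after[key] - before[key]
        if delta > tolerance:
            report["improved"].append(key)
        elif delta < -tolerance:
            report["regressed"].append(key)
        else:
            report["unchanged"].append(key)  # change within the noise tolerance
    return report


if __name__ == "__main__":
    before = {("search", "no_results"): 0.5, ("contacts", "missing_email"): 0.9}
    after = {("search", "no_results"): 1.0, ("contacts", "missing_email"): 0.6}
    print(diff_runs(before, after))
```

A non-zero `tolerance` keeps small run-to-run fluctuations from being reported as real regressions, which matters when the model's outputs are not deterministic.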

2. How did the team at Rechat approach the problem of systematically improving Lucy? The team built a systematic improvement process centered on evaluation and organized around the three levels described above: unit tests, model and human evaluation, and A/B testing.
