Hallucinations, Errors, and Dreams

🌈 Abstract

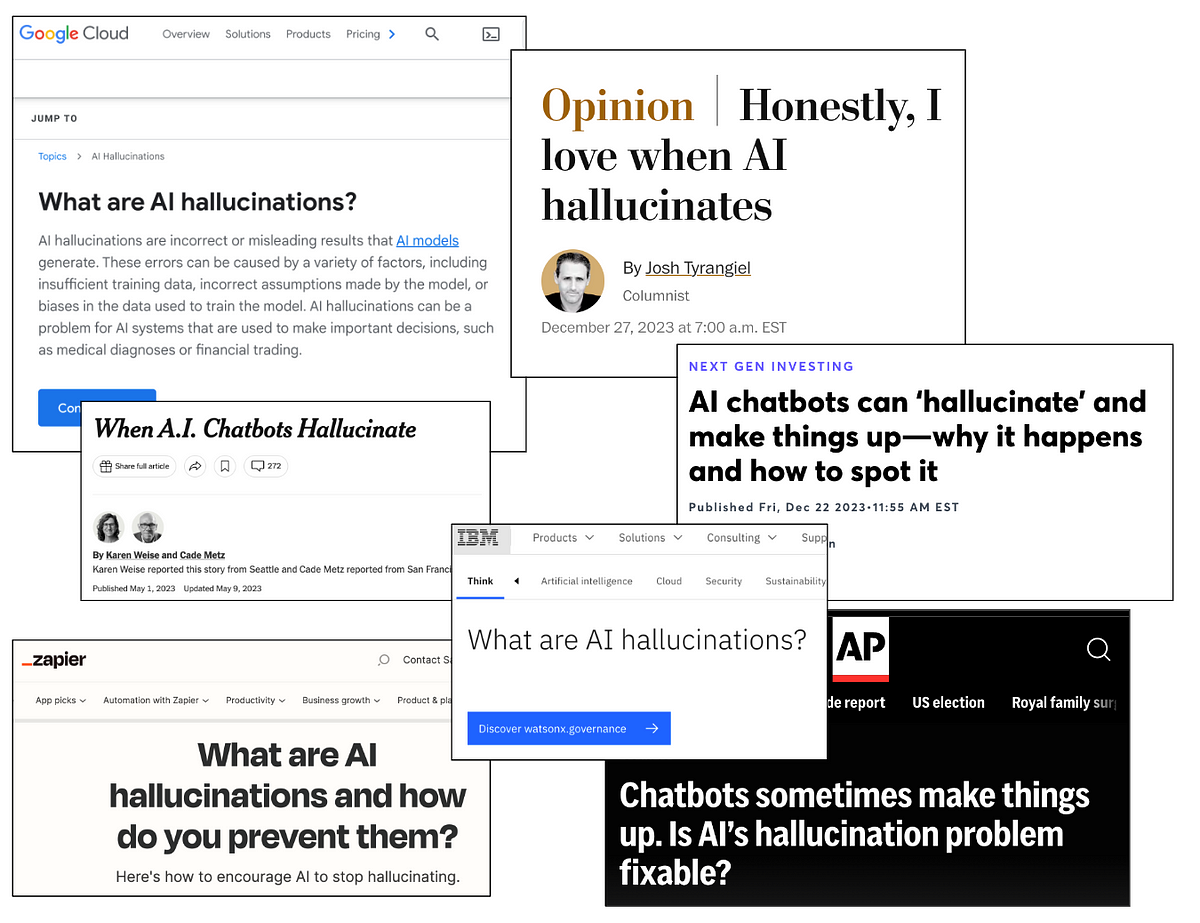

The article discusses "hallucinations" in modern AI systems, particularly large language models (LLMs) like ChatGPT. It explores why these systems produce false outputs, how hallucinations differ from the errors made by classical machine learning models, and why the prevalence of hallucinations is hard to quantify and measure.

🙋 Q&A

[01] A crash course in Machine Learning

1. What is the key difference between the machine learning approach and the classical approach of solving problems using first principles? Machine learning models do not try to understand the underlying principles or processes that generate the data. Instead, they look for patterns in the data and use those patterns to make predictions.

2. How are machine learning models like classifiers typically trained? Classifiers are trained using a supervised learning approach. A dataset of labeled examples is provided, and the model learns to map the inputs to the corresponding labels by optimizing its parameters to minimize the error on the training data.

3. How does the training process for large language models (LLMs) like GPT differ from classical classifiers? LLMs are trained using a self-supervised approach: the model learns to predict the next word in a sequence of text, rather than to assign inputs to human-provided labels. Because the text itself supplies the prediction target, the model can be trained on much larger datasets without manual labeling.
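
The contrast between items 2 and 3 can be illustrated with a minimal sketch. It is not how GPT is actually trained: a toy word-pair counter stands in for a neural network, and all names here (`labeled_examples`, `next_word_pairs`, `train_bigram_counts`, `predict_next`) are hypothetical and chosen only for illustration. The point it shows is that supervised data needs a label attached to each example, while next-word training pairs can be derived from raw text alone.

```python
from collections import Counter, defaultdict

# Supervised setting: every example needs a human-provided label.
# (Illustrative data, not from the article.)
labeled_examples = [
    ("free money, click now", "spam"),
    ("meeting moved to 3pm", "not_spam"),
]


def next_word_pairs(text):
    """Turn raw text into (context, next_word) pairs; no manual labels needed."""
    words = text.split()
    return [(tuple(words[:i]), words[i]) for i in range(1, len(words))]


def train_bigram_counts(corpus):
    """Count which word follows which: a toy stand-in for next-word training."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        for context, target in next_word_pairs(sentence):
            # Only the last context word is used in this simplified model.
            counts[context[-1]][target] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent continuation seen in training, or None."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


corpus = ["the cat sat on the mat", "the cat ate the fish"]
pairs = next_word_pairs("the cat sat")
counts = train_bigram_counts(corpus)
print(pairs)
print(predict_next(counts, "the"))
```

Note that the toy model always returns some continuation for a word it has seen, whether or not the resulting sentence is true, which loosely mirrors the point made in the next section.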

[02] The difference between a hallucination and an error

1. What is the key difference between errors made by classical machine learning models and the "hallucinations" produced by generative AI systems like ChatGPT? The key difference is that classical models have a well-defined task with clear right and wrong answers, whereas generative AI systems are asked to produce open-ended text without a single correct answer. This makes it much harder to define and measure "errors" in the output of generative AI.

2. Why does the author argue that all generative AI output could be considered a "hallucination"? The author argues that since generative AI systems are trained to reconstruct or "fill in" missing parts of existing text or images, their output is fundamentally a "hallucination" or dream-like reconstruction, rather than a factual representation of the real world.

3. What are the implications of viewing hallucinations as a fundamental property of generative AI, rather than just occasional errors? If hallucinations are a fundamental property, their prevalence may not be easily reduced simply by collecting more data or making the models larger. The author suggests that new training approaches or architectures may be needed to address the hallucination problem.

[03] On the risks of bad outputs

1. Why is it important to know not only the frequency of errors, but also the specific types of errors made by a model? The author argues that the cost and impact of different types of errors can vary greatly, so knowing an overall "error rate" is not sufficient. The specific nature of the errors is crucial for determining whether a model is suitable for a given application.

2. What are the key challenges in trying to measure the "hallucination rate" of generative AI systems? The author identifies three main challenges: 1) lack of agreement on the definition of a "hallucination", 2) the infeasibility of manually evaluating large volumes of model output, and 3) the fact that benchmark datasets may not be representative of real-world usage.

3. What approach does the author recommend for evaluating the reliability of a generative AI system for a specific use case? The author suggests creating a dataset of representative prompts and manually evaluating the model's responses to identify the types and frequencies of desirable and undesirable outputs. This bespoke evaluation is recommended over relying on general "hallucination rate" benchmarks.
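
The bespoke evaluation described above can be sketched as a simple tally. This is a minimal illustration under stated assumptions, not the author's procedure: the review data, the category names (`correct`, `harmless_omission`, `fabricated_fact`), the cost values, and the `summarize` helper are all hypothetical. It shows why a per-category breakdown, weighted by what each kind of error costs in a given application, says more than a single overall rate.

```python
from collections import Counter

# Hypothetical manual review results: (prompt_id, category assigned by a human).
reviews = [
    ("p1", "correct"),
    ("p2", "correct"),
    ("p3", "fabricated_fact"),
    ("p4", "harmless_omission"),
    ("p5", "correct"),
    ("p6", "fabricated_fact"),
]

# Hypothetical per-category costs for one specific application.
# A different use case would assign different values.
category_cost = {
    "correct": 0.0,
    "harmless_omission": 1.0,
    "fabricated_fact": 10.0,
}


def summarize(reviews, category_cost):
    """Return per-category counts, per-category rates, and average cost per response."""
    counts = Counter(category for _, category in reviews)
    total = len(reviews)
    rates = {category: n / total for category, n in counts.items()}
    expected_cost = sum(category_cost[c] * n for c, n in counts.items()) / total
    return counts, rates, expected_cost


counts, rates, expected_cost = summarize(reviews, category_cost)
for category, n in counts.most_common():
    print(f"{category}: {n} ({rates[category]:.0%})")
print(f"average cost per response: {expected_cost:.2f}")
```

In this made-up sample, half of the responses are undesirable, but the two fabricated facts account for almost all of the cost, which is the kind of distinction a single "hallucination rate" would hide.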