AI Doomerism as Science Fiction

🌈 Abstract

The article discusses the debate around AI doomerism, the belief that advanced AI systems pose an existential risk to humanity. The author argues against the doomers' perspective, contending that the overall case against AI doomerism is strong even if each individual argument is weak.

🙋 Q&A

[01] AI Doomerism as Science Fiction

1. What is the author's main argument against AI doomerism?

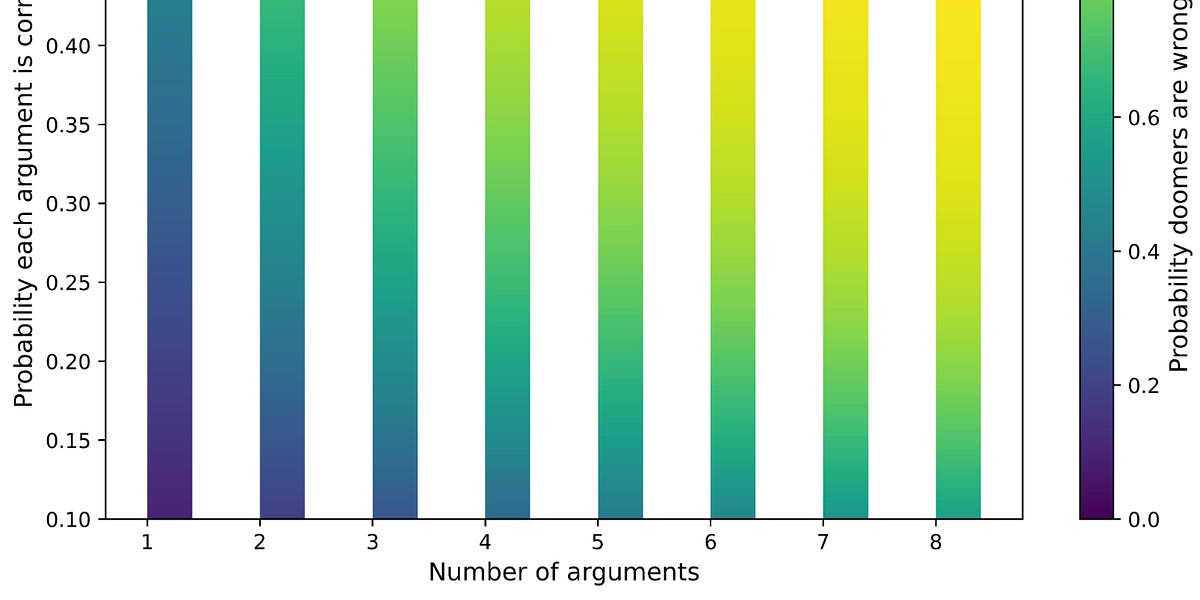

- The author's main argument is that skeptics only need to be correct about one of the arguments against AI doomerism for the whole doomer case to fall apart. Even if each individual argument has a low probability of being true, the probability that at least one of them holds, and therefore that doomers are wrong, is high.

2. What are some of the arguments the author considers against AI doomerism, and what probabilities does the author assign to them?

- The author lists eight arguments against AI doomerism and assigns each an arbitrary probability of being true:

  - Massive diminishing returns to intelligence, so a super AI won't have a huge advantage (25%)

  - Alignment is a relatively trivial problem (30%)

  - Intelligent agents don't necessarily become power-seeking (25%)

  - AI will be benevolent (10%)

  - Super AI will find it useful to negotiate with and keep humans around (10%)

  - Super AIs will be able to check and balance each other (10%)

  - Research into AI will stagnate indefinitely (35%)

  - We're fundamentally mistaken about the nature of intelligence (35%)

3. How does the author assess the overall probability of AI doomerism being correct?

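- Based on the probabilities assigned to the eight arguments, the author calculates an 88% chance that doomers are wrong, i.e. that at least one of the arguments holds. The figure is consistent with treating the arguments as independent: the chance that all eight fail together is about 12%.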

- The author also considers the possibility that even if a solution exists, our politics won't allow us to reach it (40% likely).

- Combining these factors, the author estimates a 4% chance that AI is an existential risk and we can do something about it.
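The arithmetic behind these figures can be sketched in Python. This is a minimal illustration, not the author's own calculation: it assumes the eight arguments are independent (the assumption under which the listed probabilities reproduce 88%) and that the 40% political-failure chance applies as an independent discount. All function and variable names are hypothetical.

```python
from math import prod

# Probabilities the author assigns to each argument against doomerism.
ARGUMENT_PROBS = {
    "diminishing returns to intelligence": 0.25,
    "alignment is relatively trivial": 0.30,
    "agents don't become power-seeking": 0.25,
    "AI will be benevolent": 0.10,
    "AI negotiates with and keeps humans": 0.10,
    "super AIs check and balance each other": 0.10,
    "AI research stagnates indefinitely": 0.35,
    "we're mistaken about intelligence": 0.35,
}


def prob_at_least_one(probs):
    """Chance that at least one event occurs, assuming independence."""
    return 1 - prod(1 - p for p in probs)


# Doomers are wrong if at least one argument against them holds.
p_doomers_wrong = prob_at_least_one(ARGUMENT_PROBS.values())
p_doomers_right = 1 - p_doomers_wrong

# Assumption: the 40% chance that politics blocks a solution is
# applied as an independent discount on the remaining probability.
P_POLITICS_BLOCKS = 0.40
p_risk_and_politics_ok = p_doomers_right * (1 - P_POLITICS_BLOCKS)

print(f"{p_doomers_wrong:.0%}")         # 88%
print(f"{p_risk_and_politics_ok:.0%}")  # 7%
```

Under these assumptions the 88% figure is reproduced exactly. Applying only the single 40% discount listed above leaves about 7% rather than 4%, so the author's final estimate presumably includes a further discount that is not itemized here.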

[02] Comparison to the Fermi Paradox

1. How does the author compare the issue of AI doomerism to the Fermi paradox and the supposed solution of alien visitations?

- The author sees parallels between the arguments for AI doomerism and the claims of alien visitations to explain the Fermi paradox.

- The author is unconvinced by the idea that aliens visit Earth yet leave only grainy evidence and get caught through incompetent means, and finds many of the arguments for AI doomerism similarly weak.

2. What is the author's view on the Fermi paradox and the proposed solutions to it?

- The author argues that the Fermi paradox doesn't necessarily predict that any particular civilization will make itself known to us. Positing infinite civilizations doesn't solve the problem either: any aliens that exist must be very far away, and there are infinite ways things can go wrong before they or their messages reach us.