AI Chatbots Have Thoroughly Infiltrated Scientific Publishing

🌈 Abstract

The article discusses growing concern among scientists about the misuse of ChatGPT and other AI chatbots to produce scientific literature. It covers how such use is detected, how widespread it appears to be, and what it could mean for the integrity of research and peer review.

🙋 Q&A

[01] Researchers Misusing AI Chatbots

1. What are the concerns raised by scientists about the use of AI chatbots in scientific literature?

- Scientists have identified various "tells" or signs that indicate the use of AI chatbots in published papers, such as the inclusion of phrases like "certainly, here is a possible introduction for your topic".

- Such leftover chatbot phrases are fairly obvious evidence that an author used a large language model (LLM) chatbot.

- However, in most cases, the involvement of AI is not as clear-cut, and automated AI text detectors are unreliable tools for analyzing a paper.

- Researchers have identified certain keywords and phrases (e.g., "complex and multifaceted") that tend to appear more often in AI-generated sentences than in typical human writing.
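The keyword-based approach in the last point can be illustrated with a minimal sketch that measures how often marker phrases occur per 1,000 words of a text. The function name `marker_rate` and the one-item phrase list are assumptions made for this example; only "complex and multifaceted" comes from the article, and this is not the method any of the cited researchers used.

```python
import re

# Hypothetical marker list: only "complex and multifaceted" comes from the
# article. A real study would derive its list from a reference corpus.
MARKER_PHRASES = ("complex and multifaceted",)


def marker_rate(text, phrases=MARKER_PHRASES):
    """Return marker-phrase occurrences per 1,000 words of text."""
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return 0.0
    lowered = text.lower()
    # Count case-insensitive, non-overlapping occurrences of each phrase.
    hits = sum(lowered.count(phrase.lower()) for phrase in phrases)
    return 1000 * hits / len(words)


# Toy input written for this example, not taken from any real paper.
sample = (
    "The relationship is complex and multifaceted. "
    "We measured the response in twelve samples."
)
print(marker_rate(sample))
```

A high rate in a single paper proves nothing on its own, since human authors use these phrases too. The signal described in the article is a shift in frequency across many papers.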

2. What are the potential issues with relying on AI chatbots for scientific writing?

- LLMs are not yet reliable enough to trust: they can "hallucinate," generating inaccurate information that includes fabricated citations.

- If scientists place too much confidence in LLMs, they risk inserting AI-generated flaws into their work, further complicating the already messy reality of scientific publishing.

[02] Quantifying the Scale of AI Involvement

1. What did the analysis by researcher Andrew Gray suggest about the scale of AI involvement in scientific papers?

- Gray's analysis, released on the preprint server arXiv.org, suggests that at least 60,000 papers (slightly more than 1% of all scientific articles published globally last year) may have used an LLM.

- Other studies focusing on specific subfields, such as computer science, have found that up to 17.5% of recent papers in those areas exhibit signs of AI writing.

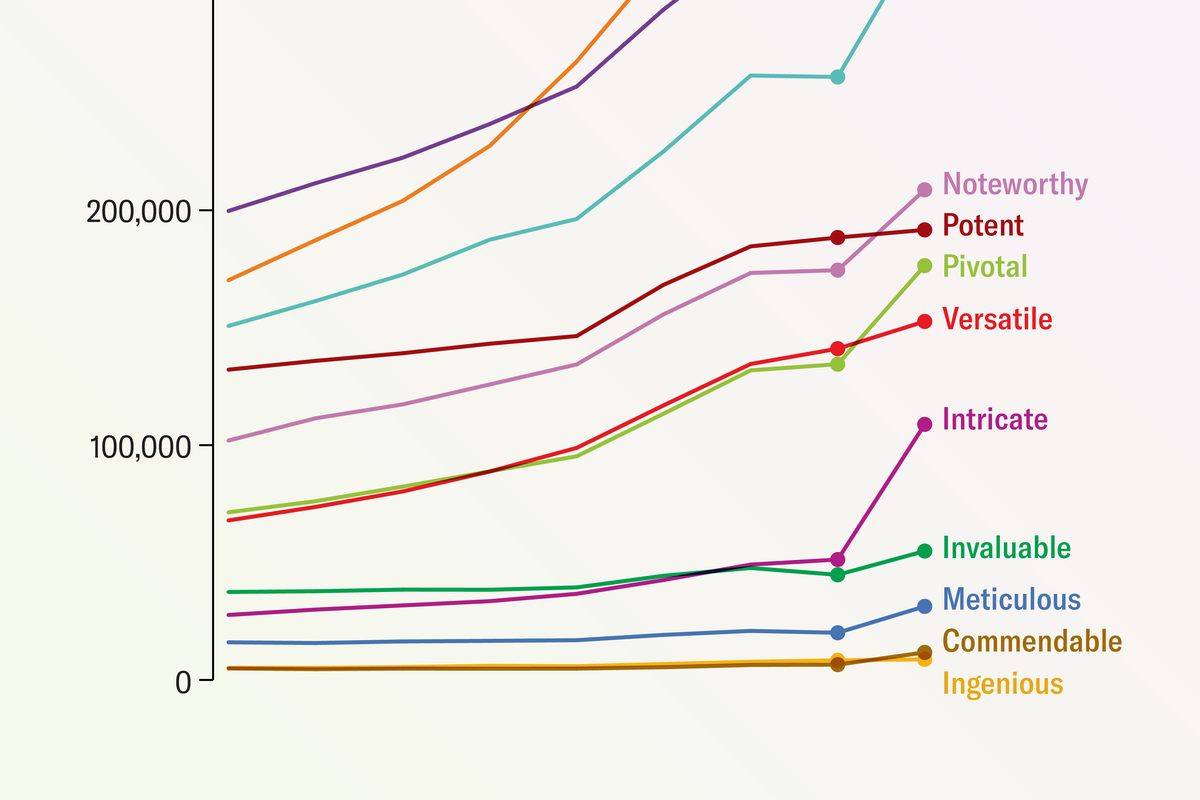

2. What did the search conducted by Scientific American find regarding the prevalence of phrases associated with AI-generated text?

- The search found that the phrase "as of my last knowledge update" appeared only once in 2020 but 136 times in 2022 across major paper analytics platforms.

- The search also identified an increase in the use of other stock phrases or words preferred by ChatGPT, suggesting a change in the lexicon of scientific writing that might be attributed to the presence of chatbots.
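A year-over-year phrase count like the one described above could be sketched as follows. This is only an illustration: the helper `phrase_counts_by_year` and the `(year, text)` record layout are assumptions, the records are invented toy data, and the article does not describe how Scientific American actually ran its search.

```python
from collections import Counter


def phrase_counts_by_year(records, phrase):
    """Count records per year whose text contains the phrase (case-insensitive)."""
    needle = phrase.lower()
    counts = Counter()
    for year, text in records:
        if needle in text.lower():
            counts[year] += 1
    return dict(counts)


# Toy records invented for illustration; they are not real search results.
records = [
    (2020, "As of my last knowledge update, no such trial existed."),
    (2022, "Results are current as of my last knowledge update."),
    (2022, "as of my last knowledge update, the dataset had 3 versions."),
    (2022, "We describe a new assay for protein folding."),
]
print(phrase_counts_by_year(records, "as of my last knowledge update"))
```

A plain substring match like this cannot tell a paper written with a chatbot from a paper that quotes chatbot output while studying it, so raw counts are best read as a rough indicator.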

[03] Potential Implications and Concerns

1. What are the potential implications of using AI chatbots in the scientific publication process?

- Employing AI technology as a grammar or syntax helper could lead to the misapplication of AI in other parts of the scientific process, such as generating key figures or outsourcing peer reviews to automated evaluators.

- There are concerns that AI-generated judgments could creep into academic papers alongside AI-generated text, which could undermine the quality and integrity of the peer-review process.

2. What are the specific concerns raised by experts regarding the use of AI chatbots in scientific research and publication?

- Experts, including Matt Hodgkinson of the Committee on Publication Ethics, warn that chatbots are "not good at doing analysis," and that this is where the real danger of using them in scientific research and publication lies.