Language Models and Spatial Reasoning: What’s Good, What Is Still Terrible, and What Is Improving

🌈 Abstract

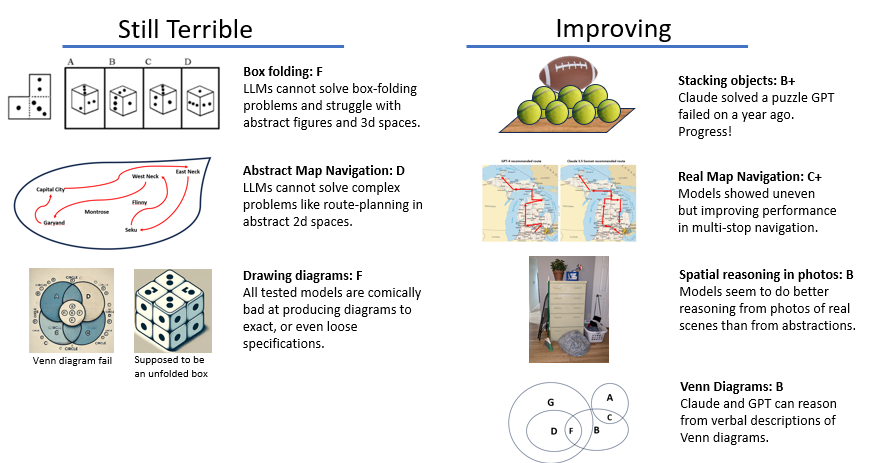

The article discusses the current state of spatial reasoning in large language models (LLMs) and the challenges they face in matching human-level spatial reasoning. It covers the author's testing and evaluation of several LLMs, including GPT-4, Claude, and Gemini, on a range of spatial reasoning tasks. The article highlights the limitations of LLMs in areas such as mental box folding, navigation, Venn diagrams, and data visualization, while also acknowledging some progress in their ability to reason about objects in photos and to generate code for data visualizations.

🙋 Q&A

[01] Spatial Reasoning in LLMs

1. What is the current state of spatial reasoning capabilities in large language models (LLMs)?

- Unlike many other reasoning capabilities, spatial reasoning did not emerge spontaneously in LLMs.

- LLMs have not been able to replicate the specialized, highly capable spatial reasoning that humans possess.

- However, their spatial reasoning is improving as AI providers add specialized training.

2. What limitations did the author's testing and evaluation of LLMs on spatial reasoning tasks reveal?

- The author tested several LLMs, including GPT-4, Claude, and Gemini, on a diverse collection of spatial reasoning problems.

- The LLMs failed immediately on the easiest mental box folding problems and have not shown noticeable improvement over the past year.

- The LLMs struggled with 2D navigation tasks, Venn diagrams, and generating effective data visualizations from a given dataset.
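To make concrete what the mental box folding problems ask for, the sketch below (not from the article) solves a simplified version spatially rather than verbally. It "rolls" an imaginary cube across a net of six grid squares and records which cube face lands on each square. That yields the pairs of squares that end up on opposite faces and rejects patterns that cannot fold into a cube. The (row, column) coordinates and the names `roll`, `fold_net`, and `opposite_pairs` are hypothetical. The sketch also ignores the face markings that box folding puzzles usually include, so it is an illustration under those assumptions, not a reconstruction of the author's test problems.

```python
from collections import deque

# Hypothetical face labels: physical cube faces are numbered 0-5 by where they
# start when the cube sits on the first square:
# 0=bottom, 1=top, 2=north, 3=south, 4=east, 5=west.
OPPOSITE = {0: 1, 1: 0, 2: 3, 3: 2, 4: 5, 5: 4}

# Grid steps as (row delta, column delta); north is the row above.
STEPS = {"north": (-1, 0), "south": (1, 0), "east": (0, 1), "west": (0, -1)}


def roll(orient, direction):
    """Tip the cube one square in `direction` and return its new orientation.

    `orient` maps each world direction to the physical face pointing that way.
    """
    o = dict(orient)
    b, t = orient["bottom"], orient["top"]
    if direction == "east":
        o.update(bottom=orient["east"], top=orient["west"], east=t, west=b)
    elif direction == "west":
        o.update(bottom=orient["west"], top=orient["east"], west=t, east=b)
    elif direction == "north":
        o.update(bottom=orient["north"], top=orient["south"], north=t, south=b)
    elif direction == "south":
        o.update(bottom=orient["south"], top=orient["north"], south=t, north=b)
    return o


def fold_net(squares):
    """Fold a net of (row, col) squares onto a cube.

    Returns {square: physical face}, or None if the squares are not a cube net.
    """
    squares = set(squares)
    if len(squares) != 6:
        return None
    start = min(squares)
    orient = {"bottom": 0, "top": 1, "north": 2, "south": 3, "east": 4, "west": 5}
    faces = {start: orient["bottom"]}
    queue = deque([(start, orient)])
    # Breadth-first walk: roll the cube onto each unvisited neighboring square
    # and record which face ends up touching that square.
    while queue:
        (r, c), o = queue.popleft()
        for direction, (dr, dc) in STEPS.items():
            nxt = (r + dr, c + dc)
            if nxt in squares and nxt not in faces:
                rolled = roll(o, direction)
                faces[nxt] = rolled["bottom"]
                queue.append((nxt, rolled))
    # Unreached squares mean the pattern is disconnected; a repeated face
    # means two squares would overlap when folded.
    if len(faces) != 6 or len(set(faces.values())) != 6:
        return None
    return faces


def opposite_pairs(squares):
    """Return the three pairs of squares that end up on opposite cube faces."""
    faces = fold_net(squares)
    if faces is None:
        return None
    square_on = {face: sq for sq, face in faces.items()}
    return {frozenset((square_on[f], square_on[OPPOSITE[f]])) for f in (0, 2, 4)}


# Usage: the familiar cross-shaped net, in (row, column) coordinates.
cross = [(0, 1), (1, 0), (1, 1), (1, 2), (1, 3), (2, 1)]
print(opposite_pairs(cross))
```

Rolling the cube keeps every fold consistent with the previous ones, which is exactly the kind of tracked spatial state that a purely verbal description of the net tends to lose.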

3. What are some examples of the LLMs' performance on specific spatial reasoning tasks?

- On a mental box folding problem, the author gave the LLM an unfolded pattern and asked which folded result it could produce. The LLM's response showed that it was approaching the problem with verbal reasoning strategies rather than genuine spatial reasoning.

- On a multi-city navigation task, the LLMs were able to provide a general strategy but failed to generate an efficient route.

- When asked to create Venn diagrams based on verbal descriptions, the LLMs were able to provide correct logical reasoning but struggled to generate accurate visual representations.
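For the multi-city navigation task, the gap between a general strategy and an efficient route can be illustrated with an exhaustive search. The sketch below assumes hypothetical city names and 2D coordinates, because the article's actual map is not reproduced here. It also assumes straight-line distance and an open route from a fixed start. It tries every visiting order and keeps the shortest one. The function names `route_length` and `shortest_route` are illustrative.

```python
import itertools
import math


def route_length(route, coords):
    """Total straight-line length of a route given as a sequence of city names."""
    return sum(math.dist(coords[a], coords[b]) for a, b in zip(route, route[1:]))


def shortest_route(start, cities, coords):
    """Try every order of `cities` after `start` and return (route, length) of the shortest."""
    best_route, best_len = None, math.inf
    for order in itertools.permutations(cities):
        route = (start, *order)
        length = route_length(route, coords)
        if length < best_len:
            best_route, best_len = route, length
    return best_route, best_len


if __name__ == "__main__":
    # Hypothetical coordinates: four points on a straight line.
    coords = {"Depot": (0, 0), "A": (2, 0), "B": (1, 0), "C": (3, 0)}
    print(shortest_route("Depot", ["A", "B", "C"], coords))
    # → (('Depot', 'B', 'A', 'C'), 3.0)
```

Exhaustive search is only practical for a handful of cities, since the number of orders grows factorially. It is used here because its answer is provably optimal, which makes it a convenient reference for checking whether a model's proposed route is actually efficient.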

[02] Improvements in LLM Spatial Reasoning

1. How are LLMs improving their spatial reasoning capabilities?

- LLMs are being trained on specialized datasets and tasks to improve their spatial reasoning abilities.

- The author notes that LLMs have had to "learn to solve spatial reasoning problems the long way around, cobbling together experiences and strategies, and asking for help from other AI models."

2. What areas have LLMs shown some progress in?

- LLMs have shown improvement in their ability to reason about objects in photos, such as identifying and describing various tools in an image.

- LLMs have also become better at generating code for data visualizations, handling both specific requests and more general visual requirements.

3. What are the limitations of LLMs' progress in spatial reasoning?

- The author notes that even with improvements, LLMs are still a long way from matching human-level spatial reasoning capabilities.

- Further progress in this area is likely to involve bringing in more specialized models as partners, which raises the challenge of integrating those models effectively with language-and-reasoning specialists such as LLMs.