hackerllama - Sentence Embeddings. Introduction to Sentence Embeddings

🌈 Abstract

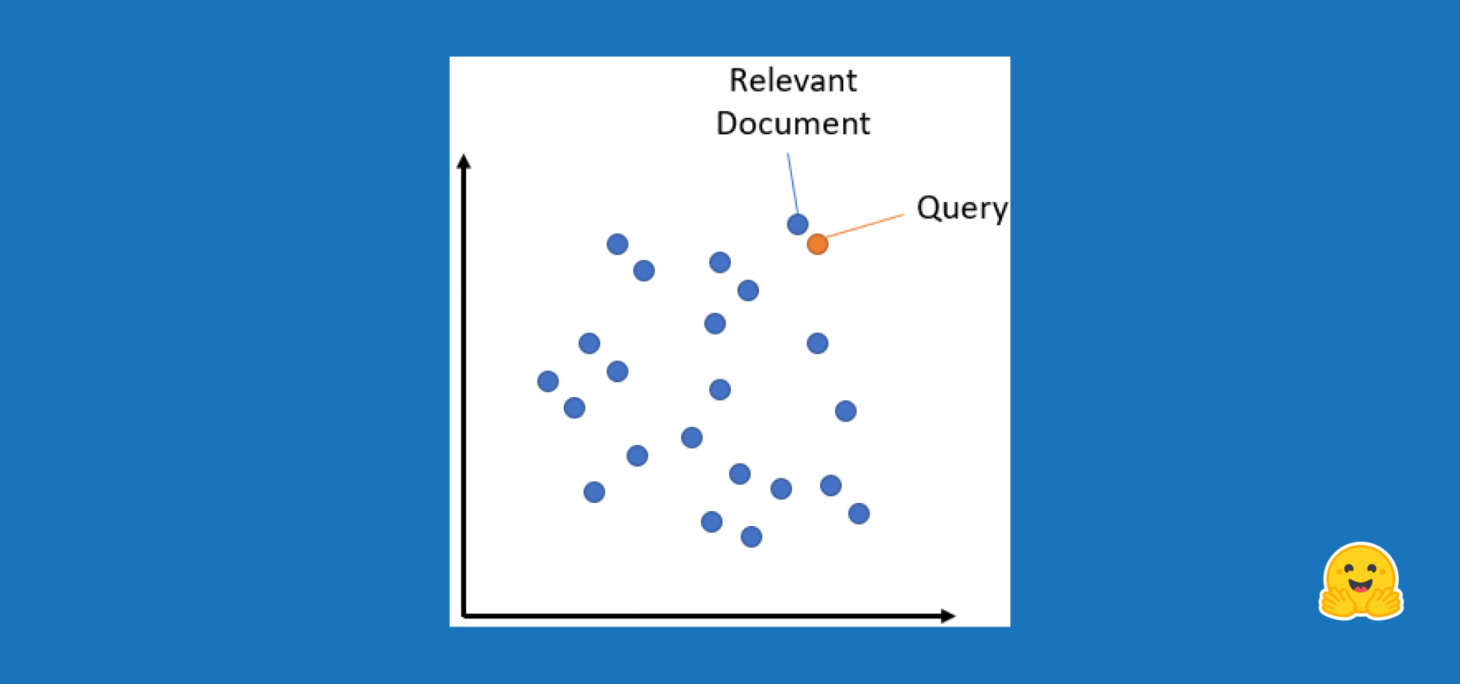

The article provides an introduction to sentence embeddings, which are vector representations of sentences that capture their semantic meaning. It covers the evolution from word embeddings to sentence embeddings, the use of transformer models like BERT for contextual embeddings, and various techniques for computing sentence embeddings such as [CLS] pooling, mean pooling, and max pooling. The article also discusses applications of sentence embeddings, including semantic search, paraphrase mining, and running embedding models in the browser. Finally, it covers the state of the ecosystem around sentence embeddings, including tools, embedding databases, and ongoing research.

🙋 Q&A

[01] From Word Embeddings to Sentence Embeddings

1. What makes transformer models more useful than GloVe or Word2Vec for computing embeddings? Transformer models like BERT can capture contextual information and learn embeddings in the context of specific tasks, unlike the fixed representations of GloVe and Word2Vec. Transformer models use attention mechanisms to weigh the importance of other words, allowing them to better handle words with multiple meanings.

2. What is the role of the [CLS] token in BERT and how does it help for computing sentence embeddings? [CLS] is a special token that the tokenizer prepends to every input. During BERT's pretraining, its final hidden state is used for the next-sentence prediction task, so it is encouraged to summarize the whole input, and its embedding can be used as the sentence embedding.

3. What's the difference between pooler_output and the [CLS] token embedding?

The pooler_output is the result of passing the [CLS] token embedding through a linear layer and a tanh activation function, which is intended to learn a better representation of the entire sentence. The raw [CLS] token embedding can also be used directly as the sentence embedding.
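To make the relationship concrete, here is a minimal sketch of a pooler head: a dense layer followed by tanh, applied to the [CLS] vector. The `pooler` function, the 2-dimensional vectors, and the identity weights are assumptions chosen for illustration; in a real model the weights are learned and the dimension is much larger.

```python
import math

def pooler(cls_embedding, weight, bias):
    """Apply a dense layer, then tanh, to the [CLS] vector (illustrative only)."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, cls_embedding)) + b)
        for row, b in zip(weight, bias)
    ]

# Toy values (assumed): a 2-dimensional [CLS] embedding and identity weights.
cls_embedding = [0.5, -1.0]
weight = [[1.0, 0.0], [0.0, 1.0]]
bias = [0.0, 0.0]

pooled = pooler(cls_embedding, weight, bias)
print("raw [CLS]:", cls_embedding)
print("pooled:   ", pooled)
```

Because tanh squashes every component into the open interval (-1, 1), the pooled vector has a different scale from the raw [CLS] embedding even when the dense layer is the identity.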

4. What's the difference between [CLS] pooling, max pooling, and mean pooling?

- [CLS] pooling uses the embedding of the [CLS] token as the sentence embedding.

- Max pooling takes the maximum value of each dimension across the token embeddings.

- Mean pooling averages the token embeddings to obtain the sentence embedding.
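The three strategies can be sketched on a toy matrix of token embeddings. The function names, the hand-written vectors, and the attention mask (used here to skip padding tokens) are assumptions for illustration, not taken from any particular library.

```python
def cls_pooling(token_embeddings):
    """Use the first token's vector ([CLS] in BERT-style models)."""
    return list(token_embeddings[0])

def max_pooling(token_embeddings, attention_mask):
    """Take the maximum of each dimension over non-padding tokens."""
    kept = [vec for vec, m in zip(token_embeddings, attention_mask) if m == 1]
    return [max(dim) for dim in zip(*kept)]

def mean_pooling(token_embeddings, attention_mask):
    """Average each dimension over non-padding tokens."""
    kept = [vec for vec, m in zip(token_embeddings, attention_mask) if m == 1]
    return [sum(dim) / len(kept) for dim in zip(*kept)]

# Toy input (assumed): 4 tokens with 3 dimensions each; the last token is padding.
token_embeddings = [
    [0.5, -1.0, 2.0],   # [CLS]
    [1.5, 0.0, -2.0],   # first word
    [-0.5, 4.0, 3.0],   # second word
    [9.0, 9.0, 9.0],    # [PAD], excluded by the mask
]
attention_mask = [1, 1, 1, 0]

cls_vec = cls_pooling(token_embeddings)                    # [0.5, -1.0, 2.0]
max_vec = max_pooling(token_embeddings, attention_mask)    # [1.5, 4.0, 3.0]
mean_vec = mean_pooling(token_embeddings, attention_mask)  # [0.5, 1.0, 1.0]
print(cls_vec, max_vec, mean_vec)
```

Without the mask, the padding row would dominate max pooling and skew the mean, which is why padding is usually excluded when pooling batched inputs.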

[02] Scaling Up

5. What is the sequence length limitation of transformer models and how can we work around it? Transformer models have a maximum sequence length, typically around 512 tokens. To handle longer inputs, we can split the text into smaller chunks, compute the embeddings for each chunk, and then aggregate the embeddings (e.g., by averaging).
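The chunk-and-average workaround can be sketched as follows. `toy_embed` is a hypothetical stand-in for a real embedding model, and the tiny `max_len` is chosen only so the example is easy to follow.

```python
def chunk_tokens(tokens, max_len):
    """Split a token list into consecutive chunks of at most max_len tokens."""
    return [tokens[i:i + max_len] for i in range(0, len(tokens), max_len)]

def embed_long_text(tokens, embed_chunk, max_len=512):
    """Embed each chunk separately, then average the chunk embeddings."""
    vectors = [embed_chunk(chunk) for chunk in chunk_tokens(tokens, max_len)]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

def toy_embed(chunk):
    """Hypothetical embedding: [chunk length, number of 'a' tokens]."""
    return [float(len(chunk)), float(chunk.count("a"))]

tokens = ["a", "b", "a", "c", "a"]
chunks = chunk_tokens(tokens, max_len=2)   # [['a', 'b'], ['a', 'c'], ['a']]
doc_vec = embed_long_text(tokens, toy_embed, max_len=2)
print(chunks, doc_vec)
```

A plain average gives every chunk the same weight, including a short final chunk; weighting by chunk length is one possible variation.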

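6. When do we need to normalize the embeddings? Normalize when you plan to compare embeddings with the dot product but only their direction should matter: after L2 normalization every vector has length 1, so the dot product equals cosine similarity and is cheaper to compute. Cosine similarity already divides by the vector lengths, so normalizing beforehand does not change its result. If the magnitude itself carries information you want to keep, use the dot product on unnormalized embeddings.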
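A small sketch of the effect of normalization, using two hand-picked vectors that point in the same direction but differ in length. The helper names are assumptions for illustration.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def cosine_similarity(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

a = [3.0, 4.0]
b = [6.0, 8.0]  # same direction as a, twice the length

raw_dot = dot(a, b)                                     # grows with vector length
cos = cosine_similarity(a, b)                           # ignores vector length
normalized_dot = dot(l2_normalize(a), l2_normalize(b))  # matches cos up to rounding
print(raw_dot, cos, normalized_dot)
```

The raw dot product is large because both vectors are long, while the cosine similarity and the dot product of the normalized vectors agree (up to floating-point rounding).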

7. Which two vectors would give a cosine similarity of -1? What about 0?

- Cosine similarity of -1 would be achieved by two vectors that are exactly opposite in direction (180 degrees apart).

- Cosine similarity of 0 would be achieved by two vectors that are perpendicular to each other (90 degrees apart).
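Both cases can be checked with a few lines of code. The vectors below are arbitrary examples; any pair pointing in exactly opposite directions, or any perpendicular pair, behaves the same way.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Opposite directions: the lengths differ, but the angle is 180 degrees.
opposite = cosine_similarity([2.0, 0.0], [-3.0, 0.0])

# Perpendicular: the dot product is zero, so the angle is 90 degrees.
perpendicular = cosine_similarity([2.0, 0.0], [0.0, 5.0])

print(opposite, perpendicular)
```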

8. Explain the different parameters of the paraphrase_mining function.

- query_chunk_size: How many sentences are processed as queries at the same time. Lower values reduce memory use at the cost of speed.

- corpus_chunk_size: How many corpus sentences each query is compared against at the same time. Lower values reduce memory use at the cost of speed.

- top_k: The number of top matches to keep for each query sentence.

- max_pairs: The maximum total number of pairs returned.
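To show what each parameter controls, here is a simplified toy version of paraphrase mining over precomputed embeddings. `mine_pairs` is a hypothetical helper written for this illustration, not the library's implementation; it only mirrors the roles of the four parameters.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def mine_pairs(embeddings, query_chunk_size=2, corpus_chunk_size=2, top_k=1, max_pairs=10):
    """Toy paraphrase mining (assumed helper): returns (score, i, j) tuples."""
    n = len(embeddings)
    best = {}  # (i, j) with i < j -> score, so each pair is stored once
    for q_start in range(0, n, query_chunk_size):        # queries handled per batch
        queries = range(q_start, min(q_start + query_chunk_size, n))
        candidates = {i: [] for i in queries}
        for c_start in range(0, n, corpus_chunk_size):   # corpus entries per batch
            for i in queries:
                for j in range(c_start, min(c_start + corpus_chunk_size, n)):
                    if i != j:
                        candidates[i].append((cosine(embeddings[i], embeddings[j]), j))
        for i, scored in candidates.items():
            for score, j in sorted(scored, reverse=True)[:top_k]:  # top_k per query
                best[(min(i, j), max(i, j))] = score
    pairs = sorted(((s, i, j) for (i, j), s in best.items()), reverse=True)
    return pairs[:max_pairs]                             # cap on total pairs returned

# Toy embeddings (assumed): sentences 0 and 1 are similar, as are 2 and 3.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]

pairs = mine_pairs(embeddings)
capped = mine_pairs(embeddings, max_pairs=1)
print(pairs, capped)
```

In this sketch the two chunk sizes only change how the work is batched, not which pairs are found, while `top_k` and `max_pairs` change the output itself.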

[03] Selecting and Evaluating Models

9. How would you choose the best model for your use case? When choosing a sentence embedding model, consider factors such as:

- Sequence length: Ensure the model can handle the expected length of your input text.

- Language: Pick a model trained on the language(s) you need.

- Embedding dimension: Larger dimensions can capture more information but are more expensive.

- Evaluation metrics: Look at the model's performance on relevant tasks in benchmarks like MTEB.

- Task-specific needs: Some models are specialized for certain applications like scientific papers.